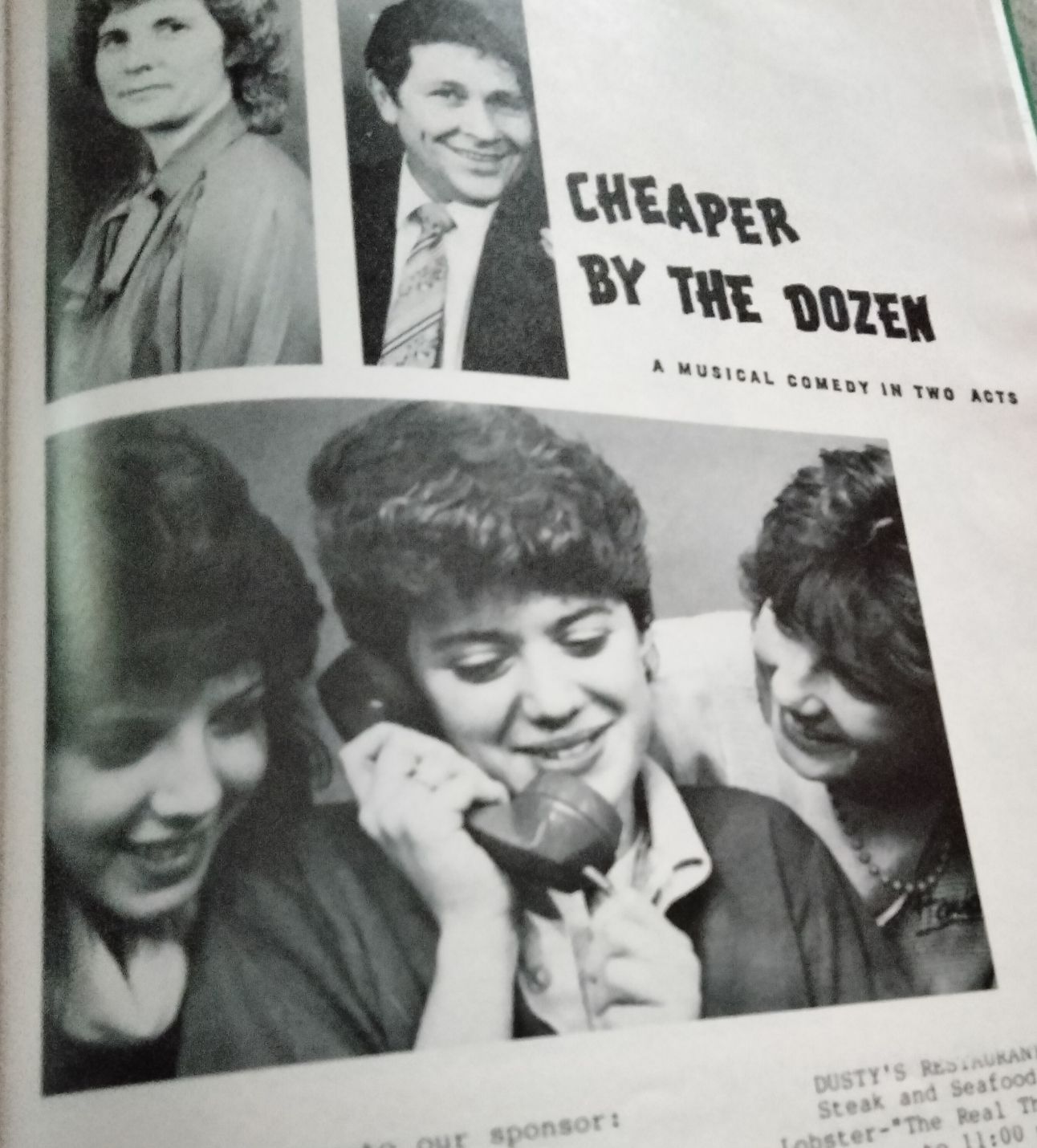

Linda Berberich, PhD - Founder and Chief Learning Architect, Linda B. Learning, headshot from her UVic Phoenix Theatre days circa 1988

Hi, I’m Linda. Thanks so much for checking out the March 2025 edition of my Linda Be Learning newsletter. If you are just discovering me, I encourage you to check out my website and my YouTube channel to learn more about the work I do in the field of learning technology and innovation.

If you’ve been following my newsletter, you know that I introduced themes in January 2025, looking at specific technologies and their intersections with human learning. This month, I am revisiting one of my greatest passions from my youth: theatre and the performing arts. I started acting when I was five years old, wrote my very first computer-based learning program on an Apple IIe when I was 14 to help me memorize lines, and got into uni on a theatre scholarship.

In this month’s edition, we’ll explore VR and AI technologies being implemented in the performing arts in ways that BENEFIT performers, instead of simply extracting from them.

Tech to Get Excited About

I am always discovering and exploring new tech. It’s usually:

recent developments in tech I have worked on in the past,

tech I am actively using myself for projects,

tech I am researching for competitive analysis or other purposes, and/or

my clients’ tech.

This month, we’re going to look at an immersive technology used for theatre experiences.

L to R: June (March Hare), Lorna (Dormouse) and Linda (Mad Hatter) rehearsing the Mad Tea Party scene from Alice in Wonderland circa 1979

Second Theatre

Second Theatre is on a mission to make live theatre, a three-thousand-year-old art form, more popular. The idea is not to replace in-person theatre but to create a virtual "Second Theatre" that brings theatre to people who missed ticket sales, who don’t live in the area, or who can’t attend for other reasons. Berlin, for example, is famous for its theatres, but for many theatre fans, it’s too far away, and the plays are in a language they don’t understand. Second Theatre overcomes those barriers.

Second Theatre's founder Erkki Izarra got the idea in 2015 when he worked as a global director at Microsoft. He had the opportunity to try an early VR experience and his first thought was, "Hey, this could be the way to relive theatre."

He gathered a team of VR pros and created a demo in KOM Theatre, viewed using a Varjo headset. It blew his mind, and he still gets goosebumps every time he tells the story of how they fired up that first demo in Varjo's first office.

Erkki was inspired by his mother and sister, who were both theatre directors. Today, his sister still writes and directs plays, and she also curates content for Second Theatre.

Second Theatre creates new audiences for theatres, records performances for future generations, and only requires a VR headset, such as Meta Quest 2 or 3 or Apple Vision Pro, to view performances.

Download the app and experience it for yourself!

Second Theatre VR app experience from a production of Hairdressers

Technology for Good

VR is not the only technology being leveraged by the performing arts. AI is another hot area, particularly in music education. This excerpt from a recent study highlights both the advantages and disadvantages of using AI for this purpose:

Artificial intelligence (AI) is opening new horizons in music education and composition (Lv, 2023). The integration of AI-based tools is transforming educational approaches, fostering creativity and innovation in choral arrangements (Memarian & Doleck, 2024). AI algorithms analyze large volumes of musical data, generating original compositions, suggesting harmonizations, and adapting existing works for different voices (Hernandez-Olivan & Beltran, 2022). This capability is precious in choral music, where the interaction of voices and harmonies forms the core of the musical experience (Grebenuk et al., 2021). One of the significant outcomes of AI implementation is the democratization of musical composition. The creation of choral music traditionally demanded extensive knowledge of music theory and harmonization (Doush & Sawalha, 2020). However, AI has streamlined this process, allowing for the effortless composition of intricate and harmonious pieces (Lv, 2023). Furthermore, AI expands the creative boundaries for composers and educators by offering new harmonies and vocal distributions that may not be immediately apparent even to experienced composers (Anantrasirichai & Bull, 2022). This, in turn, opens up new opportunities for experimentation and inspiration, which is especially beneficial for educators (Miranda, 2021). Additionally, AI provides opportunities for personalized learning by adapting compositions to specific vocal ranges and abilities of choirs (Lokare & Jadhav, 2024; Zhang & Song, 2023). However, despite these advantages, the integration of AI in choral education carries the risk of losing traditional composition skills, necessitating a strong foundation in music theory (Laidlow, 2023; Wei et al., 2022). Ethical concerns also arise regarding authorship and the originality of AI-generated works, presenting new dilemmas for educators and the music industry as a whole (Clancy, 2021).

A secondary school musical where we sang in multiple languages, circa 1984

AI is not limited to vocals and choirs. It can also greatly benefit bands by enhancing instrument learning, assisting with composition and arrangement, and even improving live performances. This blog post takes a deeper dive into the potential of AI in school choirs and bands, and into how it can enhance the learning experience for students.

But what tools are they using? Let’s look at that tech.

Kits.ai

Kits.ai, the tech used in the study highlighted at the beginning of this segment, offers studio-quality AI audio tools that clone voices, sing like anyone, play any instrument, and isolate vocals, all 100% royalty-free. Its research division, founded in 2024, tackles the hardest problems in AI audio, including generative music, singing voice conversion, and singing voice synthesis, to create studio-quality, ethical AI tools. Its core products include voice cloning, an AI voice library, and Kits Earn, a voice model program that lets artists turn their unique voices into passive income. Artists create a verified model of their vocals and earn money every time someone downloads the output.

This recent blog post describes how the author used Kits.ai to create his own choir. But there’s nothing like hands-on experience, and you can try out the tech for free in your browser.

Tech Retrospective: Tupac at Coachella

If you know me personally, you know that Tupac Shakur will always hold a special place in my heart. I’ve been a massive fan of his music and poetry from way back in the Brenda’s Got A Baby days, before I even moved to the United States.

Fast forward to 2012. I was living in the greater Seattle area and working in the tech industry, and I had the opportunity to witness in real time the “resurrection” of Tupac at Coachella. If you haven’t seen it, or haven’t seen it in a while, you can check out that performance below. And yeah, here’s the obligatory explicit lyrics warning.

For those of you not familiar with it, the Coachella Valley Music and Arts Festival is a two-weekend music and arts festival that takes place in Indio, California, near Palm Springs. It's considered one of the world's top music festivals, and features a diverse lineup of artists from many genres, large art installations and sculptures, culinary and drink experiences, and multiple stages hosting live music.

Coachella 2012 featured Tupac reunited with Dr. Dre and Snoop Dogg. He performed on April 15, 2012, Day 3 (Sunday night) of the festival, and reappeared on closing night, more than 15 years after his death. In fact, Coachella didn’t even exist as a festival until 3 years after he died, which makes the part where he says “What’s up, Coachella?” all the eerier. The performance was so well done, especially for the time, that when the video inevitably went viral, people began to question whether Tupac’s death had been a hoax and he’d simply been in hiding all along. If that had been the case, he’d certainly aged well.

Many accounts refer to the virtual being they saw on that stage as a hologram, but technically, it was a projection, not a hologram. Holograms are three-dimensional images produced by light beams and visible to the naked eye. Even though he appeared to be 3D, the virtual Tupac was a two-dimensional projection. It used a variation of Pepper's Ghost, a theatrical illusion whose principle was described more than 430 years ago. The technique was first used onstage in 1862 for a dramatization of Charles Dickens' The Haunted Man and the Ghost's Bargain, where an angled piece of glass reflected a "ghostly" image of an offstage actor.
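At its core, the optics behind Pepper's Ghost is plane-mirror reflection. The angled pane reflects only part of the light, and the audience sees the hidden figure at its mirror-image position on the far side of the pane. The minimal Python sketch below shows that geometry. It assumes an idealized flat pane viewed from above in 2D. The `reflect` function and the stage coordinates are hypothetical and were chosen only for illustration.

```python
def reflect(point, pane_point, pane_normal):
    """Mirror a 2D point across a straight pane, seen from above.

    point       -- (x, y) position of the hidden, offstage performer
    pane_point  -- any (x, y) point lying on the pane
    pane_normal -- (x, y) vector perpendicular to the pane (any length)

    Idealized model (an assumption): the pane acts as a perfect flat mirror.
    """
    px, py = point
    qx, qy = pane_point
    nx, ny = pane_normal
    # Project (point - pane_point) onto the normal and divide by |n|^2,
    # so the normal does not have to be unit length.
    t = ((px - qx) * nx + (py - qy) * ny) / (nx * nx + ny * ny)
    # A plane mirror places the image the same distance on the far side.
    return (px - 2 * t * nx, py - 2 * t * ny)


if __name__ == "__main__":
    # Hypothetical layout: the audience looks upstage toward +y, the pane runs
    # diagonally along the line y = x, and the performer hides in the wing at (3, 0).
    ghost = reflect((3.0, 0.0), pane_point=(0.0, 0.0), pane_normal=(1.0, -1.0))
    print(f"ghost appears at {ghost}")
```

In this toy layout, a performer hidden 3 units to the side appears 3 units straight upstage. The image sits exactly as far behind the pane as the performer stands in front of it, which is why a reflection can seem to share the stage with live performers.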

Virtual Tupac was a fully digital image created by Digital Domain Media Group (DDMG). DDMG is responsible for the innovative visuals in more than 80 major motion pictures and hundreds of commercials, including Thor, Tron, The Curious Case of Benjamin Button, and Star Trek, as well as its Academy Award-winning work on Titanic. What the audience saw was not found or archival footage of Tupac. It was a very believable and kind of creepy illusion projected onto a Mylar screen onstage. It took AV Concepts, the company responsible for the live projection, several months of planning and four months of studio time to create. The most critical technical element was AV Concepts' proprietary Liquid Scenic server. It delivered uncompressed media for three stacked 1920 x 1080 images, producing 54,000 lumens of incredibly clear projected imagery. AV Concepts has used similar visual technology for Madonna, Gorillaz, Celine Dion, and the Black Eyed Peas, and even to resurrect dead CEOs for corporate events.

Dr. Dre came up with the idea and funded it. He and his production team were also responsible for working with Tupac’s estate and handling the legal ramifications of using his likeness, which required the approval and blessing of his mother, Afeni Shakur. The performance was shocking and unsettling, especially when Snoop Dogg and Dre interacted with Virtual Tupac as if he were a real person. But it was also imaginative and awesome, the highlight of that year's Coachella festival, and there was even some talk of taking Virtual Tupac on tour. In June 2012, DDMG went on to win the prestigious Cannes Lions Titanium Award for the most groundbreaking work in the creative communications field.

At first, Dr. Dre was said to have a massive vision for the technology, and some people predicted a trend of reanimating dead celebrities for live performances. At the time, it seemed like it could be a significant windfall for the estates of deceased performers, and a way for bands that had lost a member to stage reunion tours. So what happened?

Check out this article for a deeper dive into the making of this phenomenal spectacle and its legacy.

Extra bonus: here’s an MTV interview with Tupac where he shares his thoughts on Donald Trump and greed — from 1992!!!! Yep, the man was a poet and a prophet.

Learning Theory and Learning Technology

Performing arts demand a significant amount of rehearsal and memorization, requiring students to learn specific, correct responses in a designated order and cadence. Lines in a script need to be said with the correct words at the correct time. The same concepts apply to choir and band. And unless you are performing a monologue or solo, you also need to know how to react, respond, and be a part of a greater whole, requiring both individual and group preparation.

The first computer-based learning program I wrote as a teenager helped me memorize my lines. It prompted me with my cues, gave me opportunities to respond, and then gave me feedback on my responses. The lines that preceded my character’s lines were coded and presented as prompts. Voice recognition and voice synthesis technologies were not readily available on the Apple IIe, so responses were typed. The program allowed some leeway for spelling, capitalization, and grammatical variations that would still be correct when spoken. If the line I typed was correct, the dialogue between characters continued. If it was incorrect, the program told me so and gave me two more tries before showing the correct line.
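Here is a minimal Python sketch of that cue, response, and feedback loop. It is a modern reconstruction under stated assumptions, not the original Apple IIe code. The script format, the function names, and the 0.9 similarity threshold are all hypothetical choices. The threshold uses Python's standard `difflib` to stand in for "some leeway for spelling."

```python
import difflib
import string

MAX_ATTEMPTS = 3       # the first try plus "two more times"
MATCH_THRESHOLD = 0.9  # assumed similarity cutoff for small spelling slips


def normalize(line):
    """Ignore capitalization, punctuation, and extra spaces, which don't
    change how a line sounds when spoken."""
    no_punct = line.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(no_punct.split())


def is_close_enough(answer, target, threshold=MATCH_THRESHOLD):
    """Accept exact matches after normalizing, plus near-miss spellings."""
    a, b = normalize(answer), normalize(target)
    return a == b or difflib.SequenceMatcher(None, a, b).ratio() >= threshold


def rehearse(script, my_role, get_response, show=print):
    """Run lines: cue lines are shown, and my lines must be typed back.

    Returns a list of (line, attempts_needed), with None when the
    correct line had to be given.
    """
    results = []
    for speaker, line in script:
        if speaker != my_role:
            show(f"{speaker}: {line}")  # the cue that prompts my next line
            continue
        for attempt in range(1, MAX_ATTEMPTS + 1):
            if is_close_enough(get_response(), line):
                show("Correct!")
                results.append((line, attempt))
                break
            if attempt < MAX_ATTEMPTS:
                show("Not quite. Try again.")
        else:  # runs only when every attempt failed (no break)
            show(f"The line is: {line}")
            results.append((line, None))
    return results


if __name__ == "__main__":
    # Toy script loosely based on the Mad Tea Party scene.
    script = [
        ("March Hare", "Have some wine."),
        ("Alice", "I don't see any wine."),
        ("March Hare", "There isn't any."),
        ("Mad Hatter", "Your hair wants cutting."),
    ]
    # Scripted answers stand in for typing; pass get_response=input to practice live.
    answers = iter(["Your hair needs a trim", "your hair wants cutting"])
    print(rehearse(script, "Mad Hatter", lambda: next(answers)))
```

In the demo, the first attempt is rejected and the second is accepted. Passing `get_response=input` turns the same loop into an interactive line-running session.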

The Apple IIe circa 1983

Entering the theatrical scripts, hard-coding the prompts and feedback (none of which is necessary with today’s technology), and rehearsing with the program multiple times made memorizing lines even faster than running lines with other people. I replicated these results in the early stages of my tech career (circa 2001). I empirically demonstrated that, in peer practice situations, computer-based feedback can be more accurate and more effective than feedback provided by human practice partners. I incorporated this finding into the design of a learning program I created for Apex Learning that taught primary school teachers how to evaluate oral reading fluency in children.

Nowadays, VR and AI technologies have great potential to help performing arts students prepare for auditions and practice their role or part independently once they’ve been cast. They can also help students rehearse as a group even when physically dispersed, and eventually perform before live and virtual audiences. Voice recognition and voice synthesis technologies have come a long way and are now much more reliable inputs. Faster processing speeds have also reduced the lag that used to be a barrier to widespread use of these technologies.

But it’s not just about the tech. To be effective, students need to produce responses and also receive feedback on those responses. Some feedback occurs naturally in the environment (e.g., the sound an instrument makes), and some is provided by others, such as fellow performers, the conductor, or the director. The feedback needs to reinforce correct behaviors and also provide corrective feedback to shape behavior when incorrect responses are made. Designing effective feedback, both its timing and its substance, is a necessary instructional design skill rooted in behavioral psychology, not computer science or engineering. That’s the part that excites me, along with the ability to bring geographically dispersed performers together to perform as one, virtually.
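To illustrate the substance side of corrective feedback, here is a hypothetical Python sketch. Instead of a bare right/wrong verdict, it tells a performer which words in a typed line were swapped, missing, or extra. It uses `difflib.SequenceMatcher` from Python's standard library to align the words. The function names and message wording are illustrative assumptions. Timing, such as giving the notes immediately after each line, is left to whatever rehearsal loop calls it.

```python
import difflib
import string


def words(text):
    """Lowercase the text and split it into words, trimming edge punctuation."""
    cleaned = (w.strip(string.punctuation) for w in text.lower().split())
    return [w for w in cleaned if w]


def word_feedback(response, target):
    """Return specific, corrective notes comparing a response to the target line.

    An exact match earns praise. Otherwise, each swapped, missing, or
    extra word is named so the learner knows exactly what to fix.
    """
    said, expected = words(response), words(target)
    if said == expected:
        return ["Correct, word for word!"]
    notes = []
    matcher = difflib.SequenceMatcher(None, said, expected)
    # Opcodes describe how to turn what was said into the expected line.
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        said_part = " ".join(said[i1:i2])
        expected_part = " ".join(expected[j1:j2])
        if tag == "replace":
            notes.append(f"You said '{said_part}'; the line is '{expected_part}'.")
        elif tag == "delete":
            notes.append(f"Extra words: '{said_part}'.")
        elif tag == "insert":
            notes.append(f"Missing words: '{expected_part}'.")
    return notes


if __name__ == "__main__":
    for attempt in ["your hair needs cutting", "your hair cutting", "Your hair wants cutting"]:
        print(attempt, "->", word_feedback(attempt, "Your hair wants cutting."))
```

The design choice here is to name what to change rather than only flag an error. That gives the learner a concrete next response to try.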

Upcoming Learning Offerings

Instead of extracting from artists, how can technology HELP them, specifically performing artists like singers and thespians? This is the question I'm currently exploring with Edward Prentice III through the VR platform he created with his company, YEP Nation Inc.

YEP Nation Audition Studio VR Platform

Ed has already started working in VR with choral groups, and I introduced the concept of Virtual Theatre School, a VR platform where students can audition, practice, and perform, all while honing their acting, stagecraft, and design skills. We're working on those prototypes now, and I can't wait to show them to you.

To get early access to our upcoming demo and learning sessions, add yourself to our waitlist.

Other YEP Nation Immersive Platforms

If you missed last month’s live learning session on peer learning, you can catch excerpts from it on my YouTube channel.

That’s all for now. I hope the tech in this month’s edition has inspired you with its potential for both performers and fans.

See you next month!

Sherry and Linda miming The Night Before Christmas circa 1985